noris Sovereign Cloud - FAQ

Manage Kubernetes clusters with nSC

The noris Sovereign Cloud (nSC) automates management and operation of Kubernetes clusters as a service.

nSC is built using Gardener, and you can find more information in the Gardener documentation.

Please note that nSC is currently in internal alpha testing and has not yet been released as generally available (GA).

We encourage you to try nSC, but keep in mind that we are unable to provide SLAs for this product at this time.

This guide will walk you through the steps to create, access and manage a Kubernetes cluster with nSC.

Log in to the Gardener dashboard with your Wavestack account.

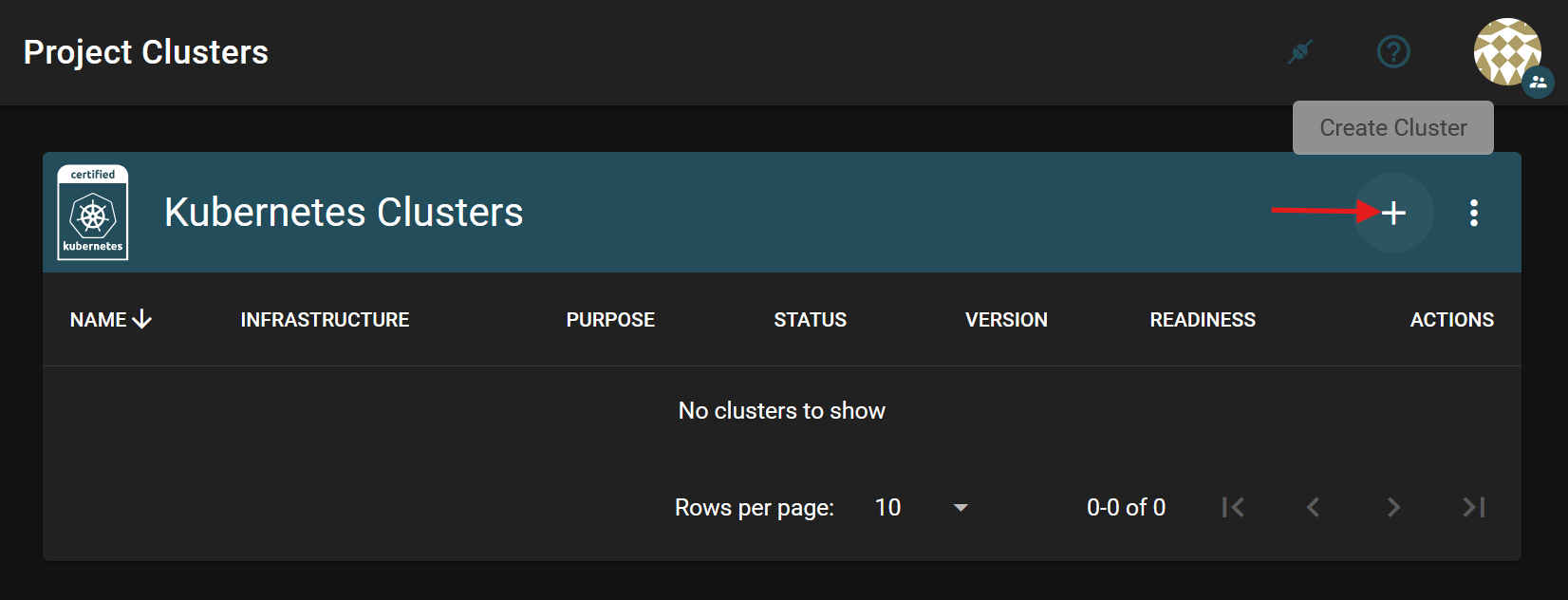

Click the + button at the top to begin creating a new Kubernetes cluster, or “shoot” in Gardener terminology.

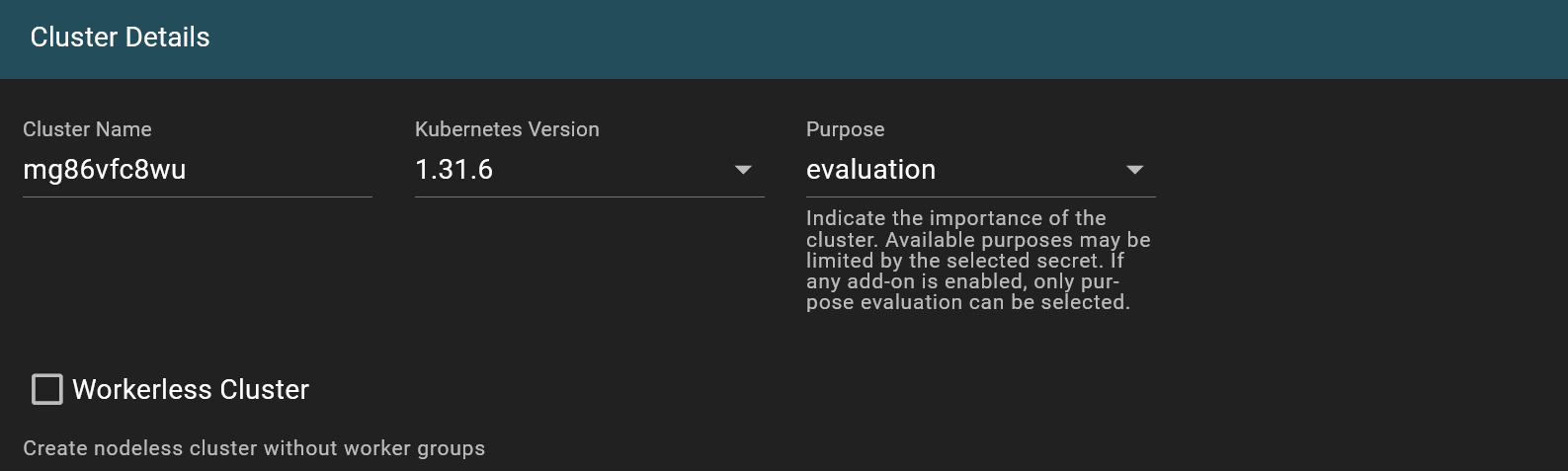

The cluster configuration wizard allows you to customize cluster settings according to your needs. For readability, only the settings that usually need user attention are documented here.

Gardener supports the following providers:

In this section, you can customize various cluster settings:

Gardener will generate a random default name for your cluster, or you can specify your own.

Clusters can be created with different Kubernetes versions. It’s recommended to always use the latest supported version. Please note our policy on supported Kubernetes versions.

This option indicates the intended use or production-readiness level of the cluster. For more information on the different configurations based on purpose, refer to shoot purposes.
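In the shoot’s YAML declaration, the name, version and purpose settings correspond roughly to the fields below. This is a minimal sketch that assumes the upstream Gardener `Shoot` resource (`core.gardener.cloud/v1beta1`); the cluster name, project namespace and version number are hypothetical placeholders, not values taken from nSC.

```yaml
apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
metadata:
  name: my-cluster              # hypothetical cluster name
  namespace: garden-myproject   # hypothetical project namespace
spec:
  purpose: production           # upstream values: evaluation, development, testing, production
  kubernetes:
    version: "1.30.4"           # hypothetical; choose a version flagged as "supported"
```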

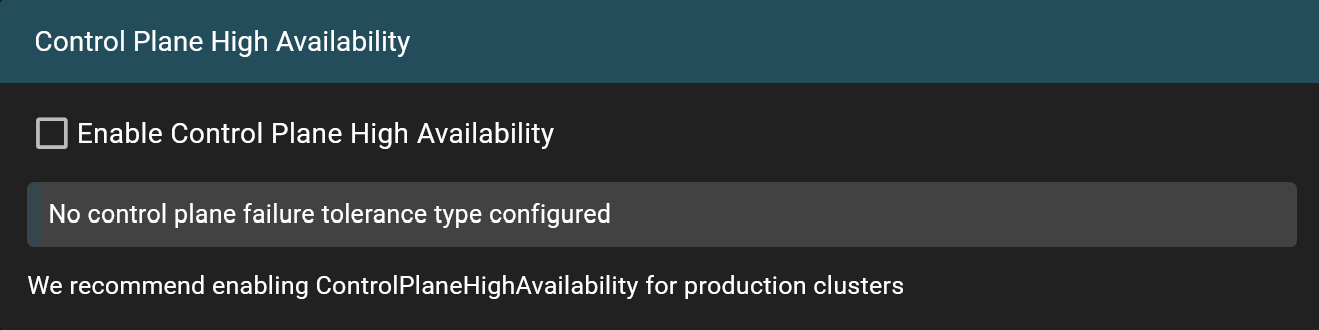

nSC supports high-availability (HA) control planes. Enabling this feature sets up a three-instance etcd cluster distributed across our data centers within the same geolocation. If it is disabled, a single-instance etcd cluster is used instead. For production clusters, we recommend enabling ControlPlaneHighAvailability for greater resilience.
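In the shoot declaration, this option maps to the control plane’s failure tolerance setting. The fragment below is a sketch based on the upstream Gardener shoot API; whether nSC sets `zone` or `node` when the option is enabled in the wizard is an assumption here.

```yaml
spec:
  controlPlane:
    highAvailability:
      failureTolerance:
        # Assumption: "zone" spreads the etcd members across zones,
        # while "node" keeps them on different nodes within one zone.
        type: zone
```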

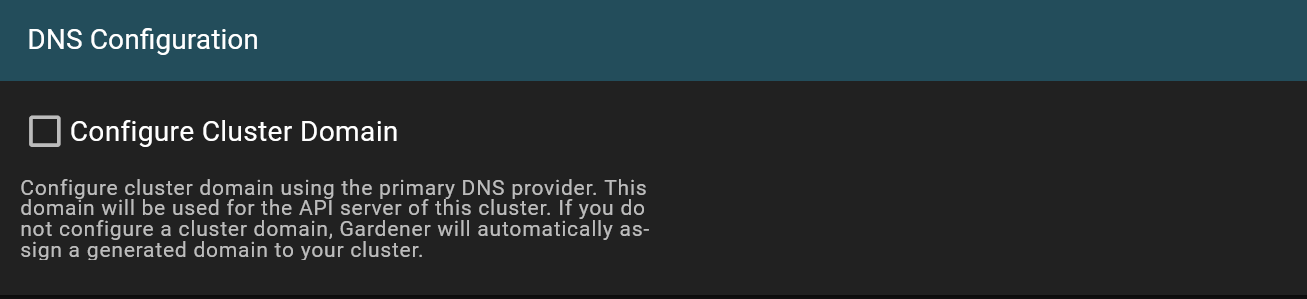

This is an advanced configuration that is explained in further detail below. Beginners can likely ignore it.

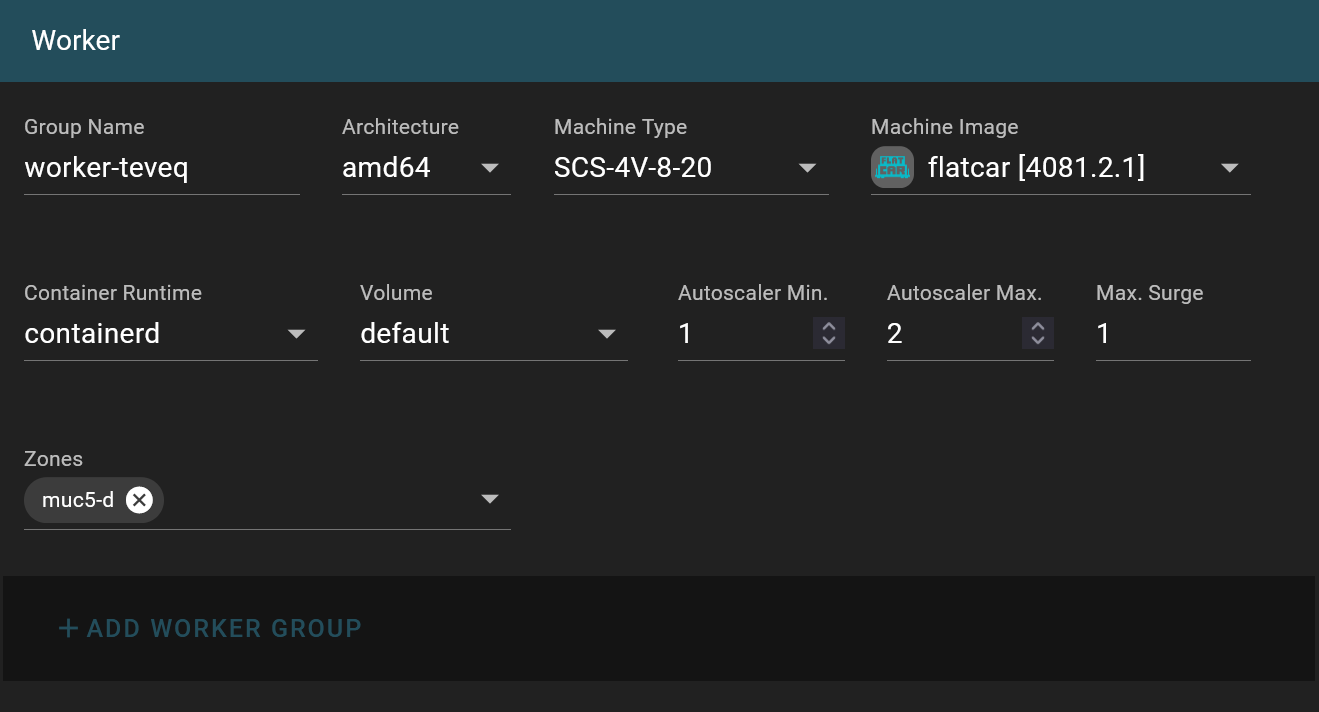

Gardener will generate a random default name for your worker group, or you can specify your own.

Choose the machine type for your worker nodes. Wavestack follows the Sovereign Cloud Stack naming conventions:

Similar to the Kubernetes version, this setting specifies the operating system image. It’s recommended to always use the latest supported version. Please note our policy on supported machine images.

Clusters with at least one worker group having minimum < maximum nodes will have an autoscaler deployment, allowing dynamic scaling of worker nodes based on demand.

The autoscaler is a modified version of the Kubernetes cluster-autoscaler with additional support for gardener/machine-controller-manager.

nSC requires clusters to consist of at least two nodes.

Similar to Control Plane High Availability, you can also distribute your workers across different data centers within the same geolocation. Keep in mind that PVC storage is specific to each zone and not replicated between them. If you require replicated storage, consider our S3 storage.
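Taken together, the worker settings above end up in the `workers` list of the shoot declaration. The following sketch assumes the upstream Gardener worker schema; the worker group name, flavor, image version and zone names are hypothetical and need to be replaced with values offered in the wizard.

```yaml
spec:
  provider:
    type: openstack
    workers:
      - name: worker-a            # hypothetical worker group name
        machine:
          type: SCS-2V-4          # hypothetical flavor in SCS naming (2 vCPUs, 4 GiB RAM)
          image:
            name: flatcar         # assumption: image name as listed in the cloud profile
            version: "3815.2.5"   # hypothetical; choose a version flagged as "supported"
        minimum: 2                # nSC requires at least two nodes
        maximum: 4                # minimum < maximum enables the autoscaler
        zones:                    # hypothetical zone names
          - zone-1
          - zone-2
```

Because PVC storage is bound to a zone, a pod that uses a volume can only be rescheduled onto nodes in the zone where that volume was created.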

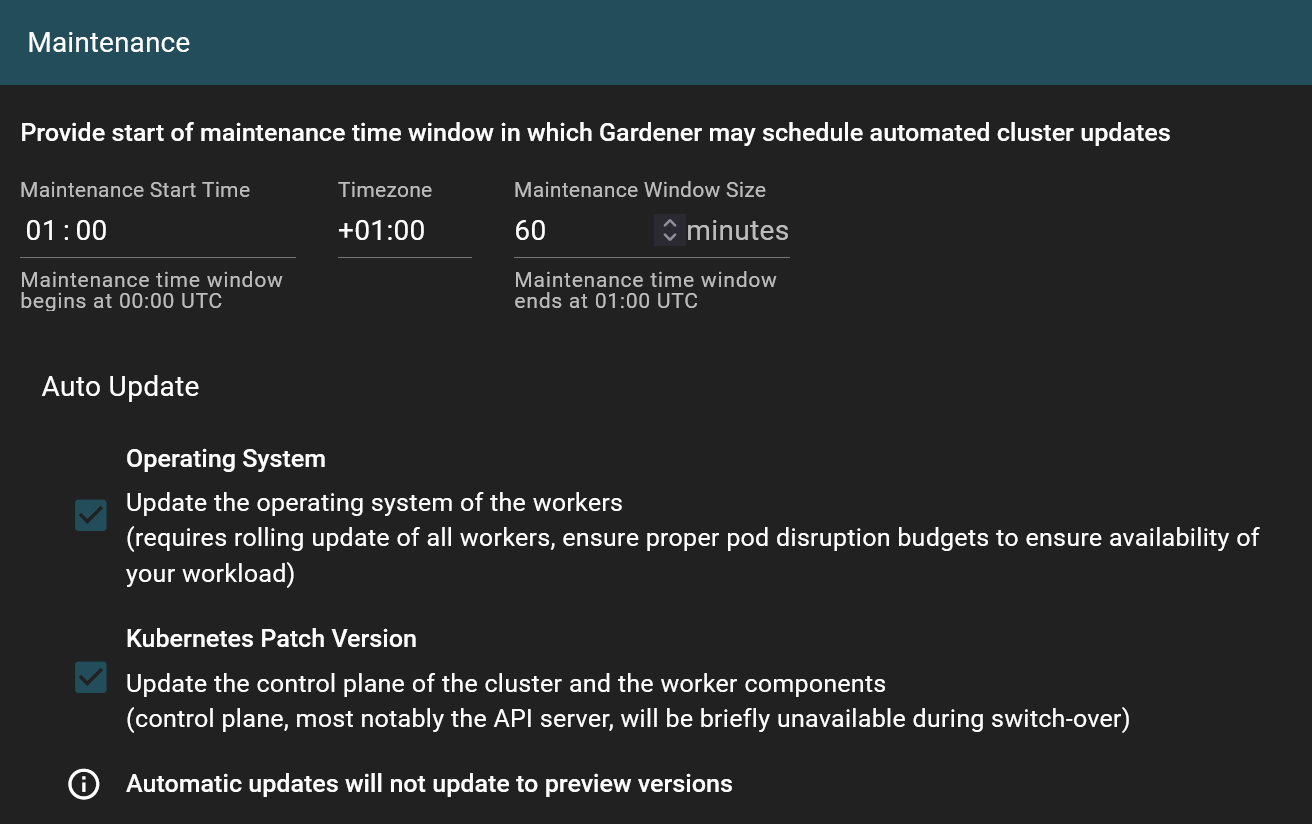

Gardener configures an automated update window for tasks like:

The default time window may differ from cluster to cluster.

For more details, refer to shoot maintenance in the Gardener documentation.
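A maintenance window and the automatic update behaviour can also be set in the shoot declaration. This is a sketch following the upstream Gardener shoot API; the times shown are hypothetical.

```yaml
spec:
  maintenance:
    timeWindow:
      begin: 220000+0100   # hypothetical: 22:00:00 at UTC+1 (format HHMMSS+ZZZZ)
      end: 230000+0100     # hypothetical: 23:00:00 at UTC+1
    autoUpdate:
      kubernetesVersion: true    # apply Kubernetes patch updates in the window
      machineImageVersion: true  # apply OS image updates in the window
```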

If your cluster doesn’t need to run all the time, you can configure a hibernation schedule to automatically scale down all resources to zero.

Please note that hibernation is subject to fair use and may be restricted at any time.
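A hibernation schedule is expressed as cron entries in the shoot declaration. The sketch below assumes the upstream Gardener schema; the times and time zone are hypothetical and hibernate the cluster outside working hours on weekdays.

```yaml
spec:
  hibernation:
    schedules:
      - start: "0 20 * * 1-5"     # hypothetical: hibernate at 20:00, Monday to Friday
        end: "0 6 * * 1-5"        # hypothetical: wake up at 06:00, Monday to Friday
        location: Europe/Berlin   # time zone used to interpret the cron expressions
```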

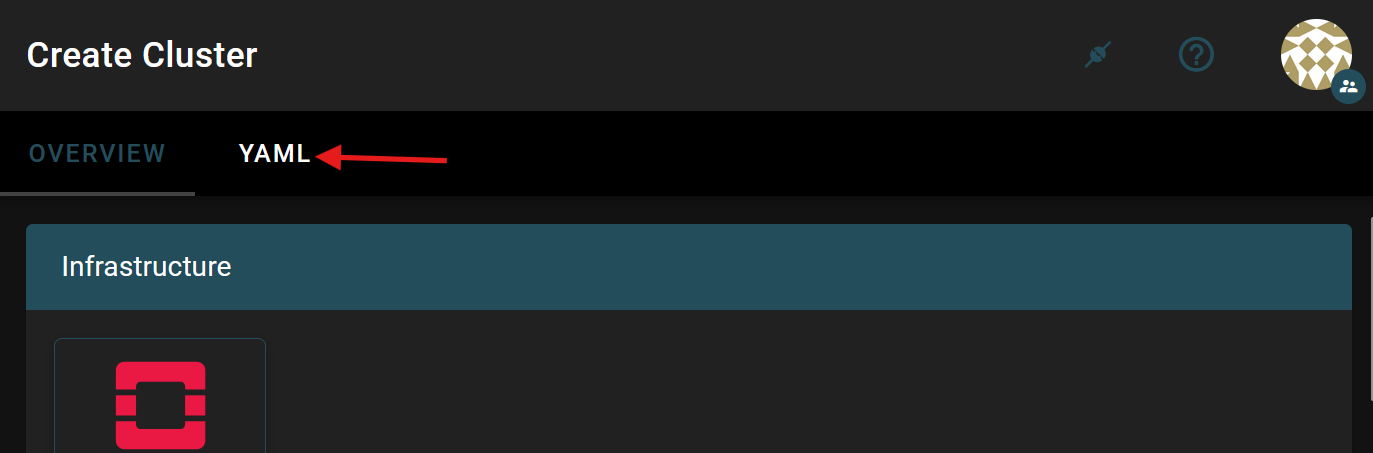

You can edit the generated Gardener custom resources directly by selecting the YAML tab. This lets you specify settings that are not available in the configuration wizard. Later parts of this guide often ask you to add code snippets to your shoot’s declaration; the YAML tab is where they go.

The full range of options is showcased in the upstream example shoot config. OpenStack-specific settings are documented here.

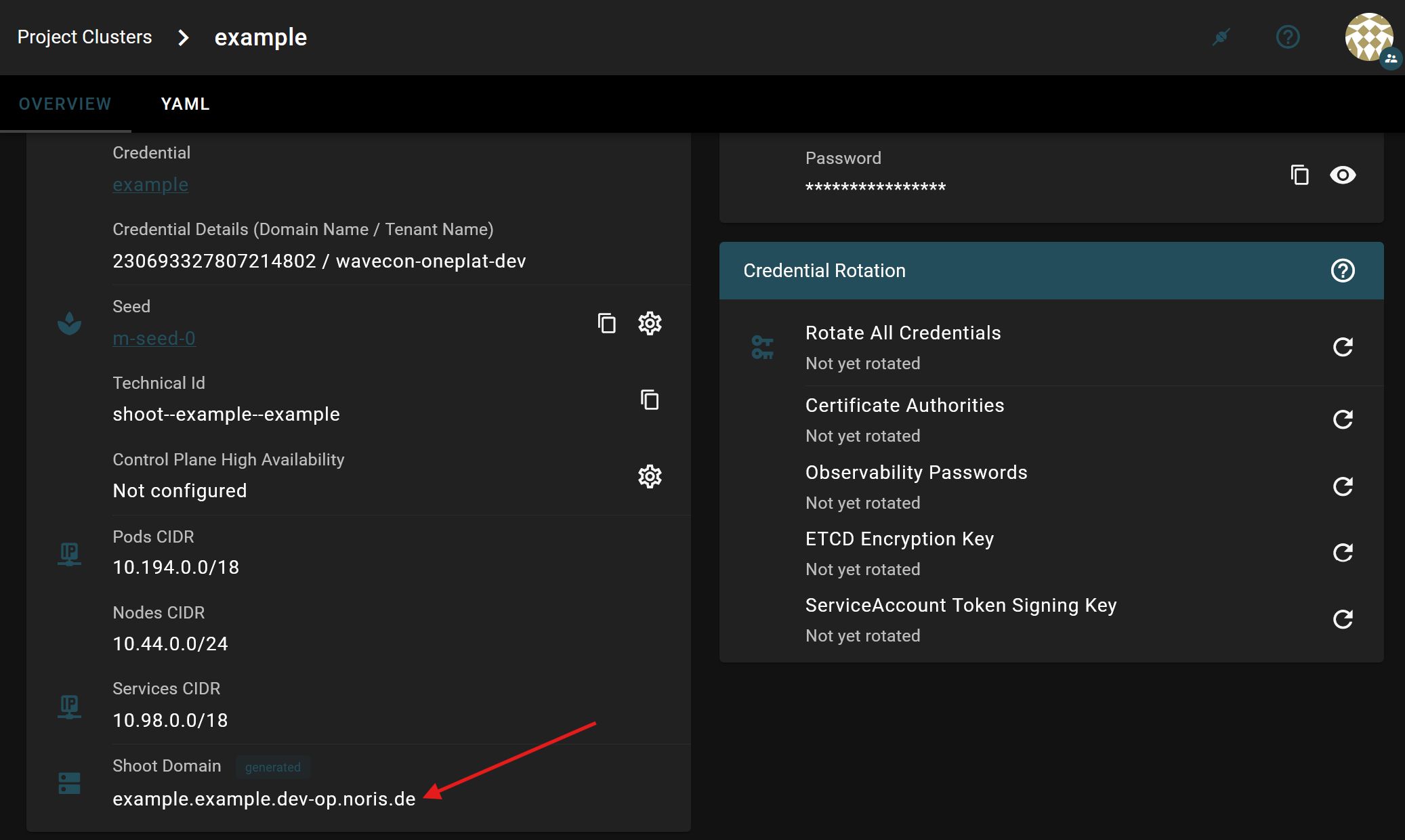

Shoot clusters use the following default network layout, which can be changed in the shoot’s specification:

10.44.0.0/24 for nodes

10.98.0.0/18 for services

10.194.0.0/18 for pods

Clusters created with these networking ranges limit the size of the cluster to:

110 pods per node

60,500 services

60,500 pods

254 nodes

To increase these limits, set larger networking ranges in the shoot’s YAML configuration when you declare the shoot. These settings can’t be changed in existing clusters, so take care to configure them appropriately during cluster creation. Please refrain from using any of the following prefixes, which are reserved for our infrastructure:

10.42.0.0/15

10.96.0.0/15

10.192.0.0/15
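Custom ranges are set in the `networking` section of the shoot declaration. The fragment below is a sketch based on the upstream Gardener shoot API; all three CIDRs are hypothetical examples chosen only so that they do not overlap the reserved prefixes above. Depending on the provider configuration, the worker network in the infrastructure settings may need to match the node range.

```yaml
spec:
  networking:
    type: cilium
    nodes: 10.50.0.0/22       # hypothetical node range (1,024 addresses)
    pods: 10.200.0.0/16       # hypothetical pod range
    services: 10.100.0.0/16   # hypothetical service range
```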

To follow this guide, you need to have the following tools installed:

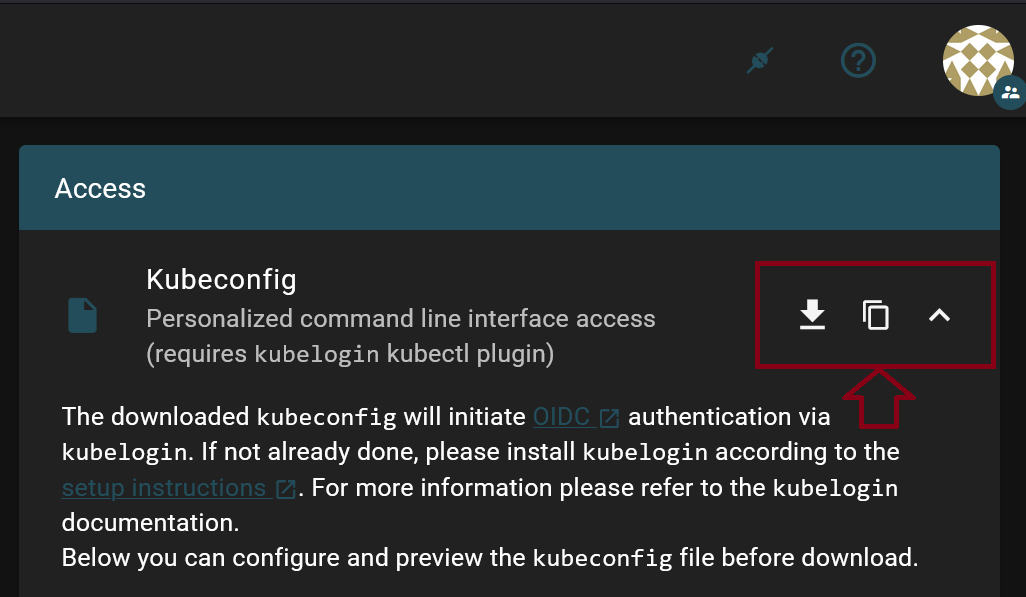

Once the cluster has finished bootstrapping, you can set up kubectl access.

Gardener supports secure authentication via OIDC with gardenlogin and kubelogin. gardenctl can serve as a replacement for gardenlogin, but to keep this guide simple, only gardenlogin is explained.

Create the config file ~/.garden/gardenctl-v2.yaml with the following content:

    gardens:
      - identity: dev
        kubeconfig: ~/.garden/kubeconfig-garden.yaml

This kubeconfig referenced in gardenctl-v2.yaml can be configured and downloaded here.

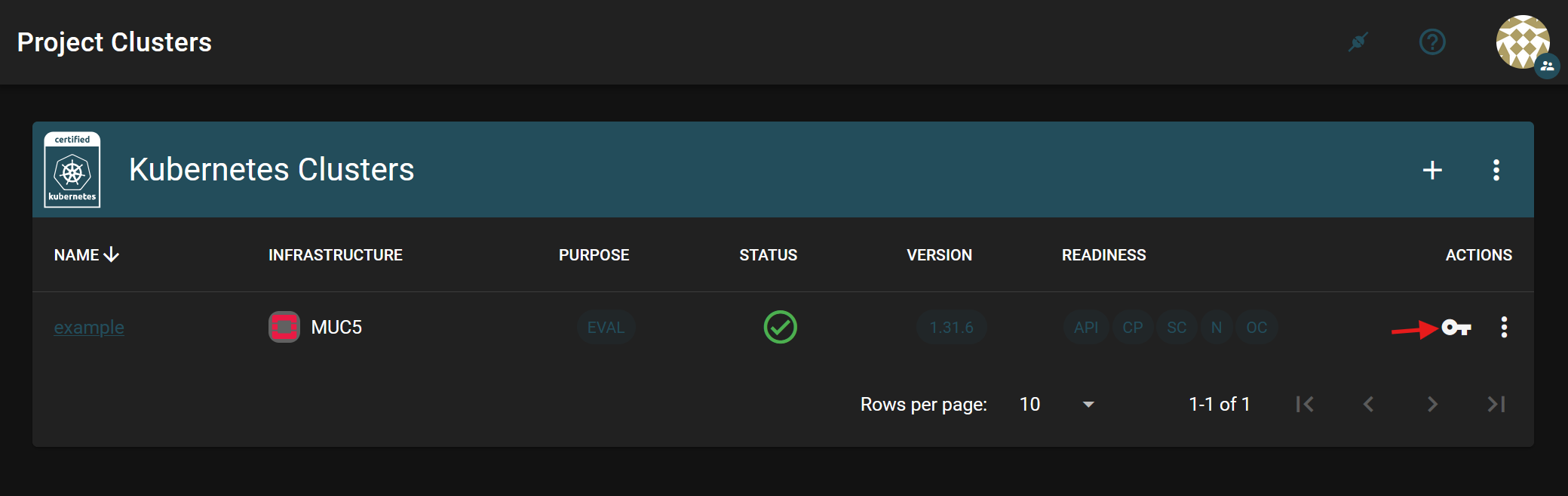

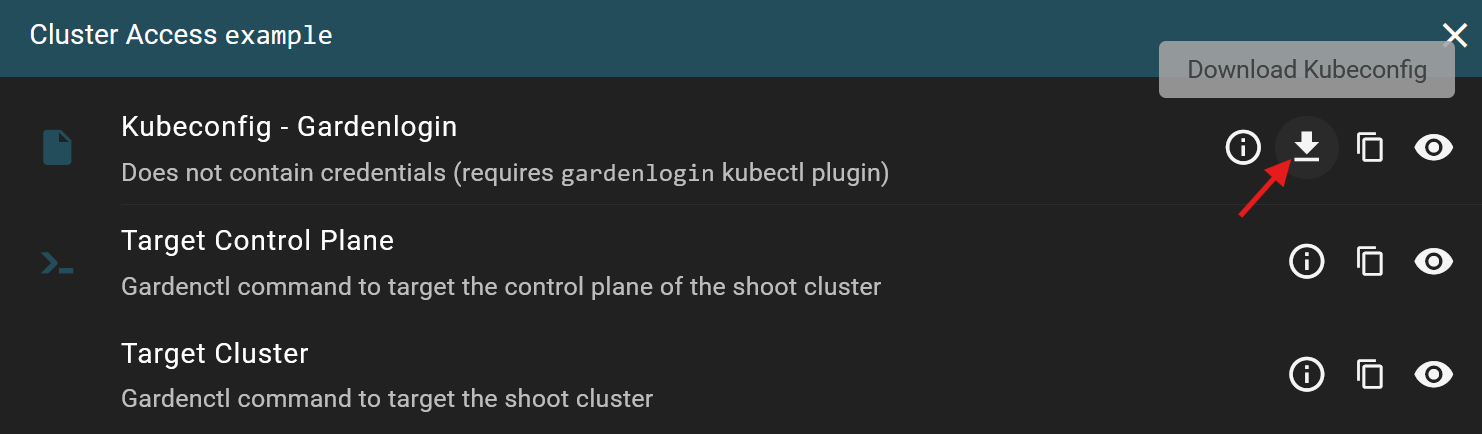

You can download the appropriate kubeconfigs for your clusters from the cluster overview page by clicking on the key symbol.

Download the Kubeconfig - Gardenlogin file.

Save it in the ~/.kube/ directory.

If you’re managing only a single cluster, you can rename the file to ~/.kube/config. Otherwise, you can configure kubectl to use a specific file by setting the KUBECONFIG environment variable.

❯ export KUBECONFIG=~/.kube/kubeconfig-gardenlogin--<project_id>--<cluster_name>.yaml

After successful installation of the required tools, you should be able to find the following binaries:

Additionally, the following files were created:

Check your available nodes by running:

    ❯ kubectl get nodes
    NAME
    shoot--example--example-worker-r6tfb-z1-76469-ttt8g
    shoot--example--example-worker-r6tfb-z1-76469-xb9dv

You can now use your new nSC Kubernetes cluster.

Our supported versions can be easily identified by the “supported” flag during cluster creation. In alignment with the upstream Kubernetes project, our goal is to support the latest patch release of the three most recent Kubernetes minor versions. The latest minor version may initially be available only as a preview while we work on adding full support. While we have confidence in the quality of our preview releases, we advise against using them for critical tasks and do not provide SLAs for them.

Older patch and minor releases will be flagged as deprecated and given an expiration date. If you have configured automatic Kubernetes upgrades, your shoot clusters will be upgraded in the next maintenance window.

Once that expiration date is reached, shoot clusters still using a deprecated Kubernetes version will be force-upgraded to a supported version regardless of the automatic update configuration. Upstream Kubernetes actively deprecates and removes legacy functions. We recommend keeping up with the upstream deprecation notices and testing upgrades preemptively.

Our supported versions can be easily identified by the “supported” flag during cluster creation. We aim to support the latest Flatcar LTS release and keep the latest three images available for installation. The latest minor version may initially be available only as a preview while we work on adding full support. While we have confidence in the quality of our preview releases, we advise against using them for critical tasks and do not provide SLAs for them.

Older releases are flagged as deprecated and given an expiration date. If you have configured automatic OS upgrades, worker nodes running deprecated Flatcar releases will be upgraded in the next maintenance window.

Once that expiration date is reached, worker nodes still using a deprecated Flatcar OS image will be force-upgraded to a supported version regardless of the automatic update configuration. While OS image upgrades rarely affect container workloads, we advise keeping track of upstream deprecation notices and testing upgrades preemptively.

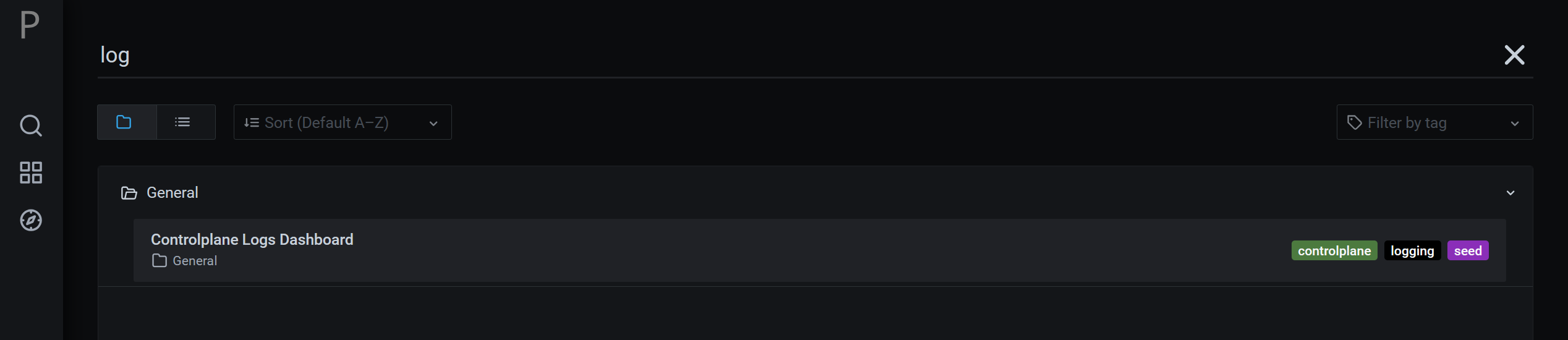

Control plane logs can be easily accessed through the web interface:

Search for “log”:

You can configure email receivers in the shoot spec to automatically send email notifications for predefined control plane alerts.

Example configuration:

    spec:
      monitoring:
        alerting:
          emailReceivers:
            - <your_email_address>

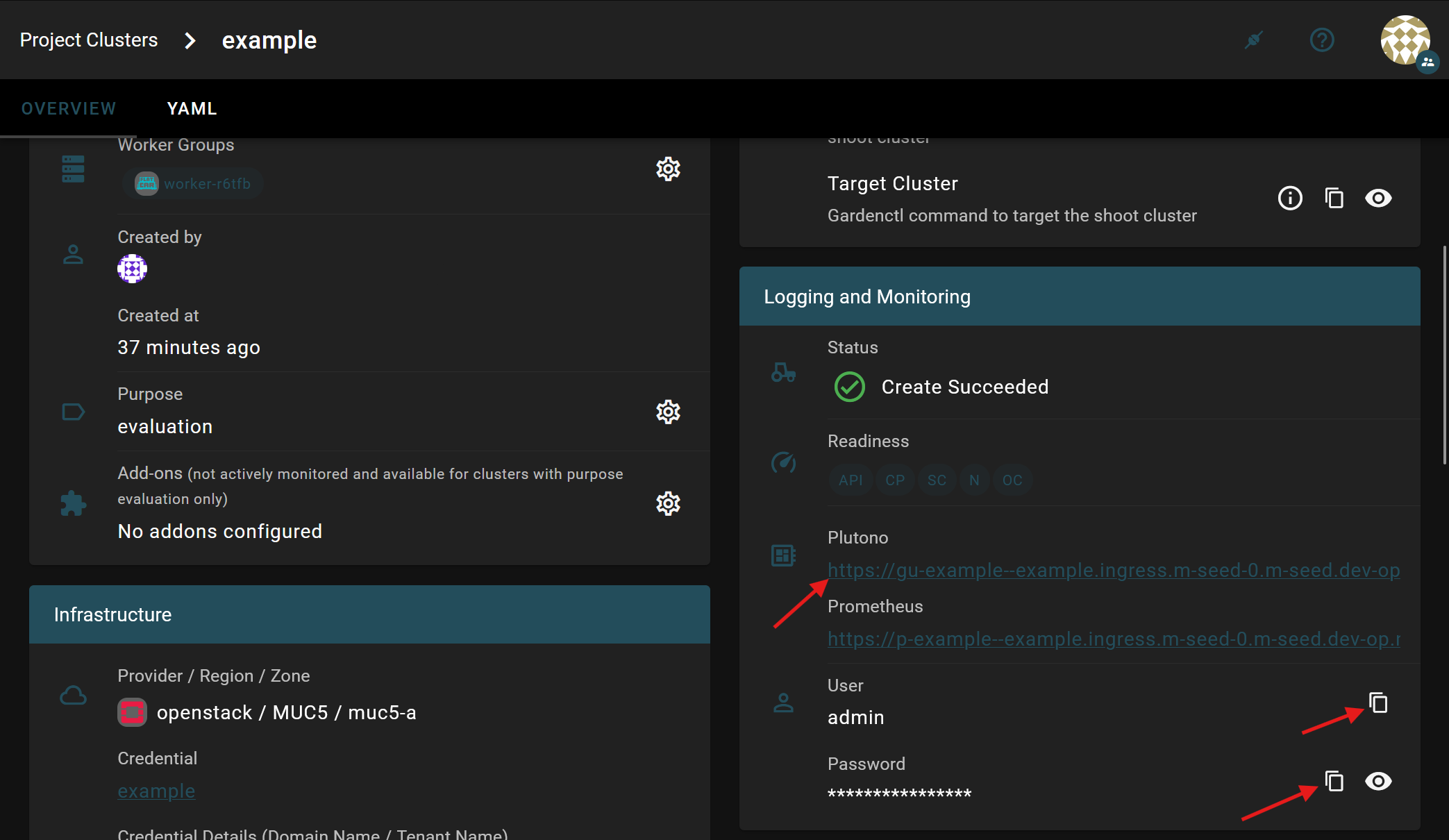

If you require more customized alerts for control plane metrics, you can deploy your own Prometheus instance within your shoot’s control plane. Using Prometheus federation, you can forward metrics from the Gardener-managed Prometheus to your custom Prometheus deployment. The credentials and endpoint for the Gardener-managed Prometheus are provided via the Gardener dashboard.
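A federation job in your own Prometheus configuration could look roughly like the sketch below. It uses standard Prometheus scrape options; the job name and match selector are hypothetical, and the endpoint and credentials are placeholders for the values shown in the Gardener dashboard.

```yaml
scrape_configs:
  - job_name: gardener-federation      # hypothetical job name
    honor_labels: true                 # keep the labels of the federated series
    metrics_path: /federate
    scheme: https
    params:
      "match[]":
        - '{job=~".+"}'                # hypothetical selector; narrow it to the series you need
    basic_auth:
      username: <username>             # placeholder, from the Gardener dashboard
      password: <password>             # placeholder, from the Gardener dashboard
    static_configs:
      - targets:
          - <prometheus_endpoint>      # placeholder host of the Gardener-managed Prometheus
```

Restricting the `match[]` selector to the metrics your alerts actually use keeps the federation scrape small.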

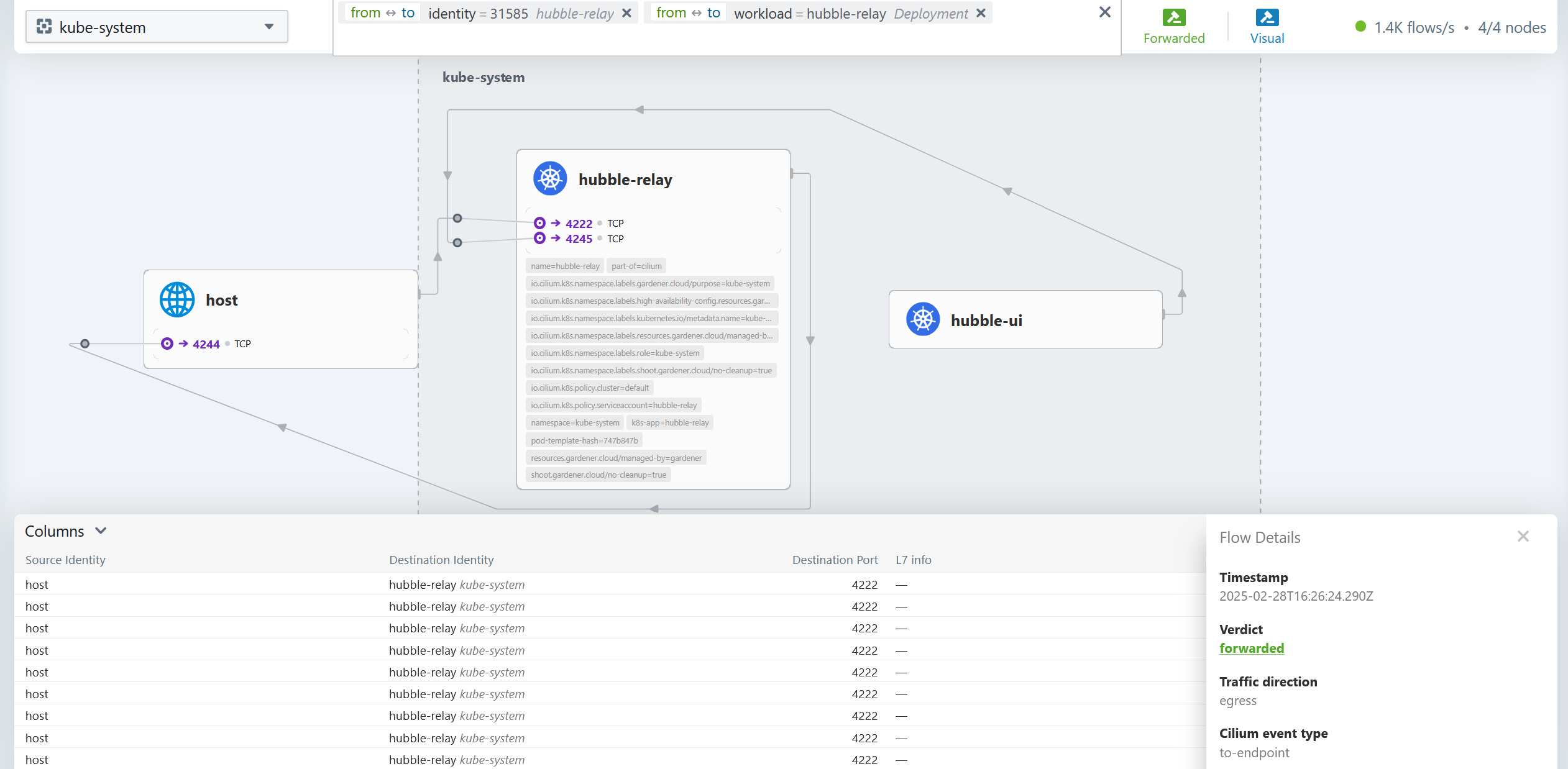

Our default CNI, Cilium, offers a free network observability feature called Hubble:

To utilize it, add the following to your shoot declaration:

    spec:
      networking:
        type: cilium
        providerConfig:
          overlay:
            enabled: true
          hubble:
            enabled: true

Afterwards, running the following command gives you access to a web frontend showing your network flows on your local machine. The cilium binary can be obtained here.

    ❯ cilium hubble ui
    ℹ️ Opening "http://localhost:12000" in your browser...

nSC lets you create a server group by adding the following to your shoot declaration. This makes nSC aware of the cluster and can help spread the workload across multiple hardware nodes.

    spec:
      provider:
        type: openstack
        workers:
          - # ...
            providerConfig:
              apiVersion: openstack.provider.extensions.gardener.cloud/v1alpha1
              kind: WorkerConfig
              serverGroup:
                policy: soft-anti-affinity

Our CRI containerd supports the use of registry mirrors. This feature is helpful for bypassing registry rate limits (e.g., hub.docker.com) and integrating a centralized container security scanner. Here’s an example of how to implement this:

    spec:
      extensions:
        - type: registry-mirror
          providerConfig:
            apiVersion: mirror.extensions.gardener.cloud/v1alpha1
            kind: MirrorConfig
            mirrors:
              - upstream: docker.io
                hosts:
                  - host: "https://mirror.gcr.io"
                    capabilities: ["pull"]

By default, our openstack-designate creates a subdomain for your cluster:

Please refer to this upstream documentation for instructions on how to automatically create DNS records that make your services publicly accessible under this subdomain. If your use case is more advanced, be sure to check out the complete upstream documentation.
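As an illustration, a DNS record for a service is typically requested through annotations on the Service object. This sketch assumes the annotation names of the upstream Gardener DNS extension; the service name, ports and TTL are hypothetical, and `<cluster_subdomain>` stands for the subdomain created for your cluster.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app                                               # hypothetical service name
  annotations:
    dns.gardener.cloud/class: garden                         # assumption: handled by the Gardener DNS extension
    dns.gardener.cloud/dnsnames: my-app.<cluster_subdomain>  # placeholder domain
    dns.gardener.cloud/ttl: "600"                            # hypothetical TTL in seconds
spec:
  type: LoadBalancer
  selector:
    app: my-app
  ports:
    - port: 80
      targetPort: 8080
```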

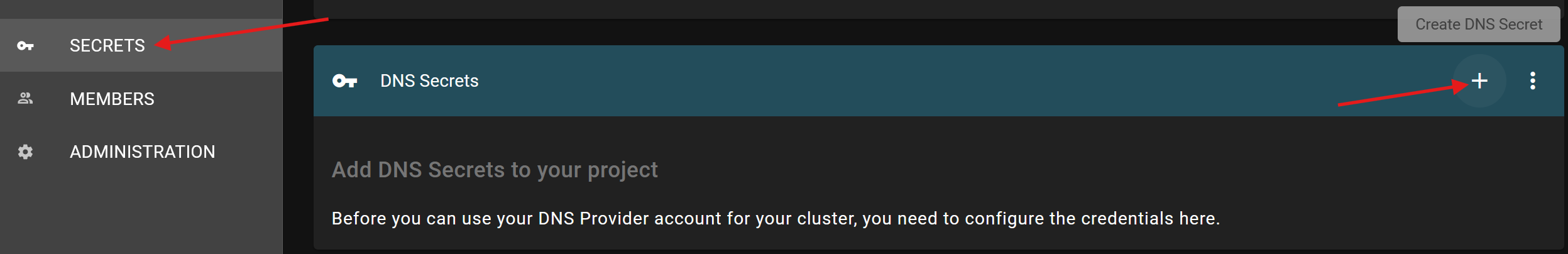

Using your own subdomain can be achieved in different ways:

If you want to federate a subdomain of your own domain to our openstack-designate, please open a support ticket.

Additionally, Gardener can manage the following DNS providers. Please note that we can’t offer any SLAs regarding compatibility with external DNS servers:

nSC lets you conveniently request free certificates via DNS-based Let’s Encrypt challenges. This feature depends on correctly configured DNS, so we strongly advise following that section of the guide first. This documentation covers how to set up certificates for the default subdomain. For advanced use cases, check out the complete upstream documentation.
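A certificate is usually requested by annotating an Ingress. The sketch below assumes the annotation used by the upstream Gardener certificate extension and an ingress controller already running in the cluster; the resource names are hypothetical, and `<cluster_subdomain>` is a placeholder for your cluster’s subdomain.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-app                              # hypothetical name
  annotations:
    cert.gardener.cloud/purpose: managed    # assumption: asks the extension to issue the certificate
spec:
  tls:
    - hosts:
        - my-app.<cluster_subdomain>        # placeholder domain
      secretName: my-app-tls                # hypothetical secret that will hold the certificate
  rules:
    - host: my-app.<cluster_subdomain>
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-app                # hypothetical backend service
                port:
                  number: 80
```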

Please note that nSC does not back up customer-generated content. It is the customer’s responsibility to back up worker node objects such as metadata (e.g., services, ingresses, pods, etc.), the content of PVs (e.g., prometheus-01), and S3 buckets. Velero is a popular open-source tool that can assist with this task.

However, nSC does take responsibility for backing up control-plane content, including the shoot’s etcd, on a regular basis. Please specify the etcd resources that need to be encrypted.
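For the customer-side backups described above, a recurring Velero backup could look roughly like this. It is a sketch that assumes Velero is already installed in the `velero` namespace with a backup storage location configured; the schedule name, cron expression and retention period are hypothetical.

```yaml
apiVersion: velero.io/v1
kind: Schedule
metadata:
  name: daily-backup        # hypothetical schedule name
  namespace: velero
spec:
  schedule: "0 2 * * *"     # hypothetical: every day at 02:00
  template:
    includedNamespaces:
      - "*"                 # back up objects from all namespaces
    ttl: 720h0m0s           # hypothetical retention of 30 days
```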

SSH access can be disabled by specifying the following setting in the shoot declaration:

    spec:
      provider:
        workersSettings:
          sshAccess:
            enabled: false

Shoot declarations can specify which etcd fields need to be encrypted:

    spec:
      kubernetes:
        kubeAPIServer:
          encryptionConfig:
            resources:
              - configmaps
              - statefulsets.apps
              - customresource.fancyoperator.io

Full documentation on the etcd encryption feature can be found here.

nSC allows users to change the default seccompProfile from the unrestricted Unconfined to the more restricted RuntimeDefault by specifying the following in your shoot declaration:

    spec:
      kubernetes:
        kubelet:
          seccompDefault: true
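Independently of the cluster-wide default, a workload can request the profile explicitly through the standard Kubernetes `securityContext`. The pod below is a minimal sketch; the pod name, container name and image are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: seccomp-demo                       # hypothetical pod name
spec:
  securityContext:
    seccompProfile:
      type: RuntimeDefault                 # use the container runtime's default seccomp profile
  containers:
    - name: app                            # hypothetical container
      image: registry.k8s.io/pause:3.9     # hypothetical placeholder image
```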

nSC allows users to configure their Pod Security admission defaults in the shoot declaration. These defaults apply when an application does not set the mode labels itself:

    spec:
      kubernetes:
        kubeAPIServer:
          admissionPlugins:
            - name: PodSecurity
              config:
                apiVersion: pod-security.admission.config.k8s.io/v1
                kind: PodSecurityConfiguration
                # Level label values must be one of:
                # - "privileged" (default)
                # - "baseline"
                # - "restricted"
                defaults:
                  enforce: "baseline"
                  audit: "baseline"
                  warn: "baseline"
                exemptions:
                  # Array of authenticated usernames to exempt.
                  usernames: []
                  # Array of runtime class names to exempt.
                  runtimeClasses: []
                  # Array of namespaces to exempt.
                  namespaces: []
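The mode labels mentioned above are set per namespace and take precedence over the cluster-wide defaults. A minimal sketch using the standard Kubernetes Pod Security labels, with a hypothetical namespace name:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: my-app   # hypothetical namespace
  labels:
    pod-security.kubernetes.io/enforce: restricted   # reject pods that violate the level
    pod-security.kubernetes.io/audit: restricted     # record violations in the audit log
    pod-security.kubernetes.io/warn: restricted      # return warnings to the client
```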

Due to the service’s dynamic nature, the actual costs can vary significantly based on factors such as cluster size, storage usage, load balancers, public IP reservations, hibernation and the utilization of the auto-scaling feature.

The minimal estimate for the smallest possible cluster (in cloud points) is as follows:

A cluster must always include at least two nodes. This minimal configuration does not require additional load balancers, public IPs or PVC storage, though these can be added by the user as needed.

This section contains specific information about achieving C5-compliant shoot clusters. It may also provide helpful suggestions for general security improvements. Please contact your sales representative for consulting quotes.

Disabling SSH is likely a step in the right direction. Our containers are pulled through a container registry cache that implements vulnerability scanning. Customers can utilize the same mechanism for their own registry cache (e.g., Harbor). Our default OS image, Flatcar, is immutable after the initial boot.

This topic is explained in Data Backup and Recovery. etcd encryption is documented here.

The Gardener platform can be extended with the following additional extensions. If you would like us to add this functionality, please request a quote.

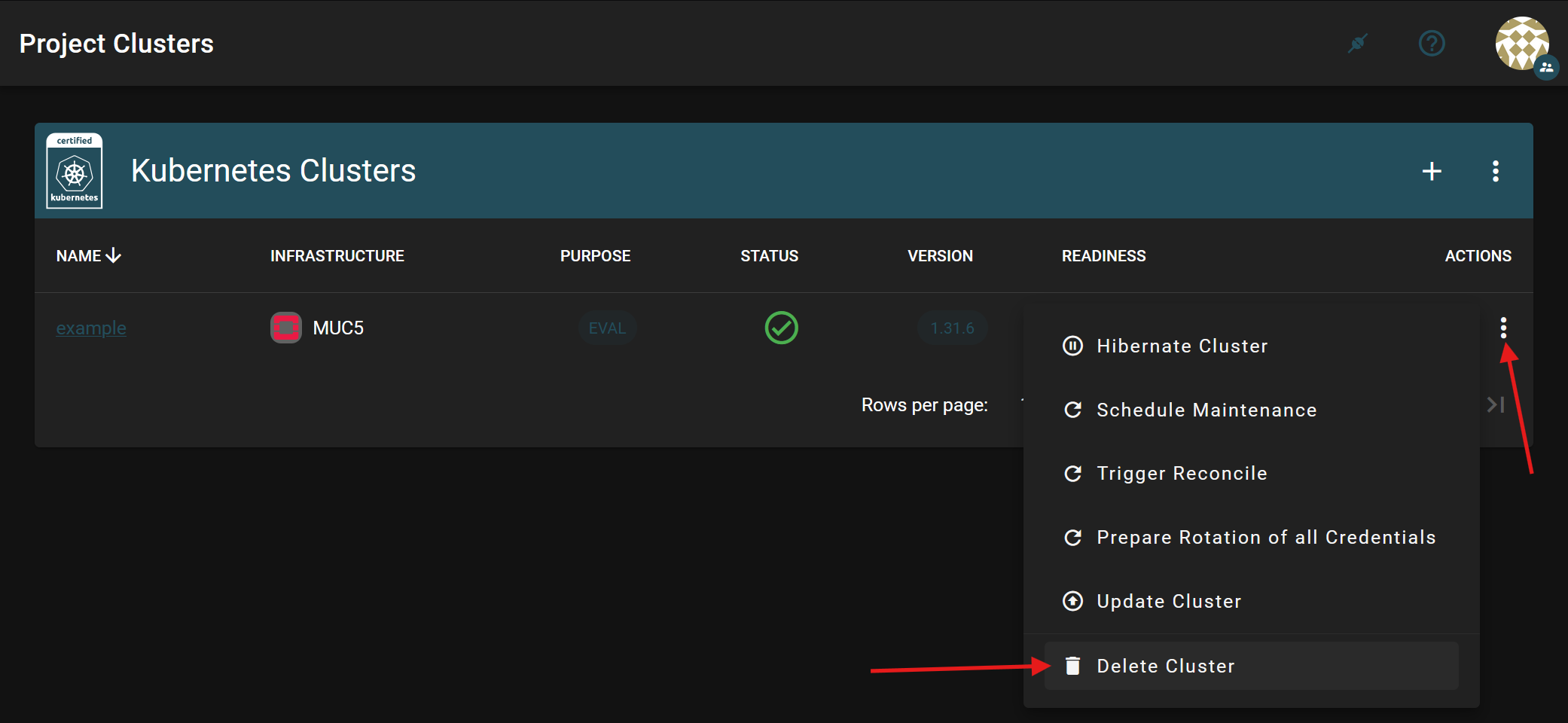

To delete a cluster, click on the three dots on the left side of the cluster overview and select “Delete Cluster”.